Scale-Out File Server Role and Citrix Provisioning Services vDisk Stores: My Thoughts and Recommendations

Updated: The focus and root cause of this blog post have changed since I initially wrote it. There are tons of good nuggets in the first section, so I decided to leave it in place so readers can follow my troubleshooting logic…

Over the last couple months I’ve been playing and working extensively with Windows Server 2012 R2. Citrix’s release of XenDesktop 7.1 and PVS 7.1 added full support for Windows Server 2012 R2, so I figured it was time to re-evaluate my current standards and deployment preferences around XenDesktop, Provisioning Services, etc.

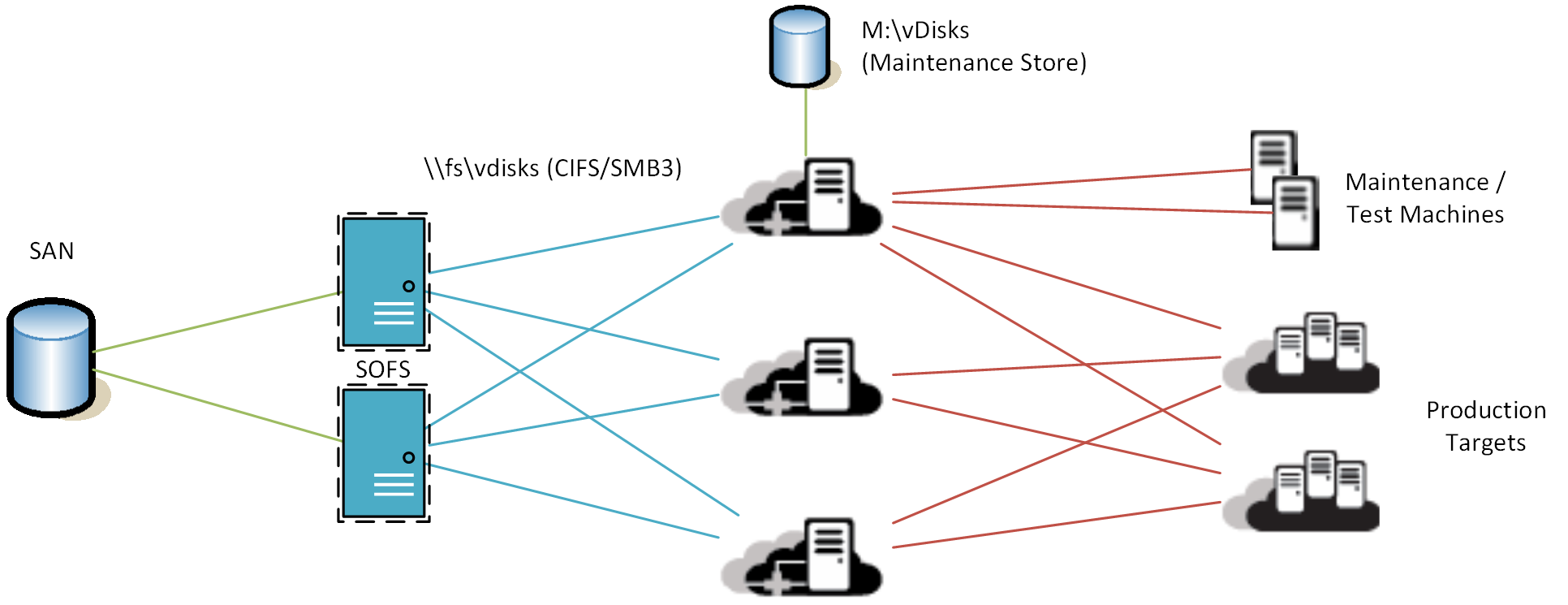

In my current setup, I’m using a CIFS Store provided by a Windows Server 2012 R2 Scale-Out File Server (Failover Clustering) to provide all the SMB3 goodies (and CSV caching) to the PVS servers. Incidentally, this is the same SOFS that I’m using for Citrix profiles and folder redirection.

Aside from a couple of bruises, I’ve had pretty good success with Provisioning Services Versioning over the years, so I am using that method for revision management. In my opinion, the issue I’m about to describe would not exist with the traditional (aka old-school) VHD copy/import approach to revision management. It’s also worth mentioning that my PVS VHDs use 16MB chunk sizes, not 2MB.

Based on my particular setup and testing, I’ve discovered one pain point that can be avoided with a minor architectural change: add a block-based Maintenance Store. Call it whatever you like and provision it however you like: a VMDK/VHD attached with a drive letter, an iSCSI-attached LUN, or a pass-through volume. It doesn’t matter how you go about it, as long as it’s block based. This Maintenance Store should only be attached to a single Provisioning Server that you will use for maintenance/versioning/etc. Here’s why…

The shared disk presented to the Scale-Out File Server sits on a reasonably fast LUN, and normal file copy operations average 200MB/s. Needless to say, I never imagined I would have performance issues with normal PVS operations.

For reference, a normal file copy operation typically uses a 1MB transfer size, as shown in Process Monitor (see the “Length” field):

If you’ve been using PVS Versioning for any length of time, you know that once you create new versions, you must eventually merge! Citrix recommends no more than 5-7 AVHD files in a chain. Let’s review the AVHD merge process. If this process is completely unfamiliar, I recommend you read up here: http://support.citrix.com/proddocs/topic/provisioning-7/pvs-vdisks-vhd-merge.html
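If you want to keep an eye on chain length, here is a minimal Python sketch that groups vDisk files by name and counts the AVHD deltas stacked on top of the newest full VHD. It assumes the version files follow a `Name.vhd`, `Name.1.avhd`, `Name.2.vhd` naming pattern. The function names and the default threshold of 5 (the low end of the 5-7 guidance) are hypothetical and chosen only for illustration. PVS does not expose any of this.

```python
import re
from collections import defaultdict

# Assumed naming convention (illustrative): "Win81.vhd" (base),
# "Win81.1.avhd", "Win81.2.avhd" (deltas), "Win81.3.vhd" (merged base).
VERSION_RE = re.compile(
    r"^(?P<name>.+?)(?:\.(?P<ver>\d+))?\.(?P<ext>vhd|avhd)$", re.IGNORECASE
)


def version_chains(filenames):
    """Group vDisk files by name; each chain is sorted by version (base = 0)."""
    chains = defaultdict(list)
    for fn in filenames:
        m = VERSION_RE.match(fn)
        if not m:
            continue  # skip manifests, property files, lock files, etc.
        ver = int(m.group("ver") or 0)
        chains[m.group("name")].append((ver, m.group("ext").lower(), fn))
    return {name: sorted(files) for name, files in chains.items()}


def avhds_since_last_base(chain):
    """Count AVHD deltas stacked on top of the newest full VHD."""
    count = 0
    for _ver, ext, _fn in reversed(chain):
        if ext == "vhd":
            break
        count += 1
    return count


def chains_needing_merge(filenames, max_deltas=5):
    """Return {vdisk_name: delta_count} for chains longer than max_deltas."""
    result = {}
    for name, chain in version_chains(filenames).items():
        deltas = avhds_since_last_base(chain)
        if deltas > max_deltas:
            result[name] = deltas
    return result
```

To use it, pass in the file names from a store folder, for example the output of `os.listdir()` on your store path.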

Basically, a delta file (AVHD) is created every time you create a new version. These AVHDs eventually need to be merged back into the base to create a new, complete VHD file…
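Conceptually, a merge flattens the chain. For each sector, the newest layer that contains that sector wins. The toy model below represents each disk as a Python dictionary mapping sector index to data, and it illustrates only that idea. It is not how MgmtDaemon.exe is actually implemented, and the function names are hypothetical. It counts one write per 512-byte sector, which matches the write size I observed in Process Monitor.

```python
SECTOR = 512  # bytes per write, as observed in Process Monitor


def merge_chain(base, deltas, total_sectors):
    """Flatten a base image plus ordered deltas (oldest first) into a new base.

    Each image is a dict {sector_index: data}; a delta only holds the
    sectors written in that version. Returns (new_base, write_ops).
    """
    layers = [base, *deltas]
    new_base = {}
    write_ops = 0
    for sector in range(total_sectors):
        # Check the newest layer first; the first hit is the current data.
        for layer in reversed(layers):
            if sector in layer:
                new_base[sector] = layer[sector]
                write_ops += 1  # one sector-sized write to the new base
                break
    return new_base, write_ops


def sector_writes(disk_bytes, sector=SECTOR):
    """Number of sector-sized writes needed to rewrite disk_bytes of data."""
    return -(-disk_bytes // sector)  # ceiling division
```

At 512 bytes per write, rewriting a fully allocated 50 GiB vDisk (an assumed size) works out to `sector_writes(50 * 2**30)`, which is 104,857,600 individual write requests.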

The merge operation is performed by a process called MgmtDaemon.exe, which runs in the background under the service account’s context. This is good news, as it means you can safely log off the session that was used to request the merge, and the merge continues in the background. If the Stream Service is restarted (or the PVS server reboots, for that matter), your version will continue to say “Merging” but the new .vhd file will have disappeared. Not a problem: simply delete that version and start over.

So, let’s get down to the heart of the matter. MgmtDaemon.exe is responsible for merging the base and incremental versions. It reads in sectors from the base (VHD) and chained (AVHD) versions, checks which is most current, writes that sector to the new base (VHD), and moves on to the next sector to start the whole process over again. Better yet, it does this in 512B transfer sizes. Yes, you read that correctly: Bytes, not Kilobytes, not Megabytes, not Gigabytes…Bytes. If you’re lucky, every once in a while you’ll see a 4KB or 8KB ReadFile operation, but writes are always 512B:

On a block-based disk, this is no problem, as block storage is well designed to handle many small transfers (512B is quite small, considering most transfers are 8x that at 4KB). Over a TCP/IP connection, however, this absolutely murders TCP Window Scaling and the other network protocol performance features designed to move large blocks of data.
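To see why tiny requests hurt so much more on the wire than on a local disk, consider a simple latency-bound model. If each request waits for a full round trip before the next one is issued, throughput is capped at request size divided by per-request time. The 1 ms round trip and 1 Gb/s link below are assumed figures, not measurements from my lab. Real SMB clients can also pipeline requests, so treat this as a rough sketch only.

```python
def effective_throughput(request_bytes, round_trip_s, link_bytes_per_s):
    """Bytes/s when requests are issued one at a time (queue depth of 1).

    Each request pays one full round trip plus its time on the wire.
    """
    per_request_s = round_trip_s + request_bytes / link_bytes_per_s
    return request_bytes / per_request_s


RTT = 0.001         # assumed 1 ms effective round trip per request
LINK = 125_000_000  # 1 Gb/s expressed in bytes per second

small = effective_throughput(512, RTT, LINK)      # MgmtDaemon.exe-sized writes
large = effective_throughput(2**20, RTT, LINK)    # 1MB copy-sized transfers
print(f"512B requests: {small / 1e6:.2f} MB/s")   # ~0.51 MB/s
print(f"1MB requests:  {large / 1e6:.2f} MB/s")   # ~111.69 MB/s
```

Under these assumptions, the 512B case lands close to the ~512KB/s I saw during merges, while 1MB requests come close to filling the link.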

Remember how I said normal copy operations use a 1MB transfer size? That is roughly 2,000x larger than a 512B transfer. So you shouldn’t be surprised that the MgmtDaemon.exe process averaged 512KB/s, roughly 1/400th the speed of a copy operation (200MB/s). If you’ve ever troubleshot user logon times, you probably know that a user with thousands of 1KB roaming cookies can easily take longer to log on than a user with a 50 (dare I say 500) MB profile. I believe this comes down to per-request overhead: every small operation pays for a full network round trip, no matter how little data it carries.
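If you want to double-check the arithmetic, here it is in Python. The calculation assumes binary units (1MB = 1,048,576 bytes).

```python
KB = 1024
MB = 1024 * KB

copy_transfer = 1 * MB   # typical copy request size (Process Monitor "Length")
merge_transfer = 512     # MgmtDaemon.exe write size
print(copy_transfer // merge_transfer)   # 2048, i.e. roughly 2,000x

copy_rate = 200 * MB     # observed file copy throughput per second
merge_rate = 512 * KB    # observed merge throughput per second
print(copy_rate // merge_rate)           # 400, i.e. 1/400th the speed
```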

So, what’s the big deal? Well, for starters, your merge jobs are likely to fail. At best, they will simply take a REALLY long time. I commonly saw a merge hang for an hour or longer at each percentage of completion. In fact, I left one merged-base job running for over 24 hours, and it was still sitting at 70%. Needless to say, this is a bit embarrassing and would make anyone hate PVS Versioning after dealing with it for a while, especially when it doesn’t have to be this way.

Like I mentioned above, a simple architectural change can prevent a world of headache. Here’s what you do…

– Add a new VMDK/VHD to one of your Provisioning Servers. Format and label the volume, then create a folder for storing the vDisks.

– Create a Store in the PVS Console called “Maintenance”

– If you’ve already created a bunch of VHDs and AVHDs and need to move them to this store to merge, have no fear, use the Export feature covered here to create the manifest file. Copy the VHDs, AVHDs, and Manifest files over to your Maintenance store. Then, you can import them and merge to a new base.

– Use your CIFS vDisk Store for VHDs that are “in production”. In other words, NEVER use PVS Versioning on vDisks that are in that store. Instead, use the Maintenance store for your maintenance and test VMs. When you’re ready to move a change into production, merge a new base, copy/paste the new base to the CIFS Store and import it for production use.
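If you’d rather script the file-copy part of these steps, here is a minimal Python sketch that copies every file belonging to one vDisk from one store to another. It only handles the copy. The export, import, and merge still happen in the PVS Console. The extension list (`.vhd`, `.avhd`, a `.pvp` properties file, and an `.xml` manifest) and the function name are assumptions, so check what your own stores actually contain before relying on it.

```python
import shutil
from pathlib import Path

# Assumed file types for a vDisk: disks, deltas, properties, export manifest.
VDISK_EXTENSIONS = (".vhd", ".avhd", ".pvp", ".xml")


def copy_vdisk_files(vdisk_name, src_store, dst_store, extensions=VDISK_EXTENSIONS):
    """Copy files named '<vdisk_name><ext>' or '<vdisk_name>.<n><ext>'.

    Returns the list of copied file names. Existing files in dst_store
    with the same name are overwritten.
    """
    src, dst = Path(src_store), Path(dst_store)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(src.iterdir()):
        if not path.is_file() or path.suffix.lower() not in extensions:
            continue
        stem = path.name[: -len(path.suffix)]
        version = stem[len(vdisk_name) + 1:]
        is_base = stem == vdisk_name
        is_version = stem.startswith(vdisk_name + ".") and version.isdigit()
        if is_base or is_version:
            shutil.copy2(path, dst / path.name)  # copy2 keeps timestamps
            copied.append(path.name)
    return copied
```

For example, `copy_vdisk_files("Win81", r"\\fileserver\vDisks", r"M:\Maintenance")` would stage one vDisk in the Maintenance store. All of the names and paths in that call are hypothetical.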

I know this sounds like a major headache, but trust me the alternative is much worse. In the end, your configuration may look something like this:

In my testing, merging the same VHDs / AVHDs once moved over to the Maintenance Store took less than an hour per vDisk. Hopefully this blog post helps to eliminate some potential architectural/design flaws if you intend to use PVS Versioning with CIFS/SMB shares.

APPENDED 2013.1.5 based on feedback from Brian Olsen

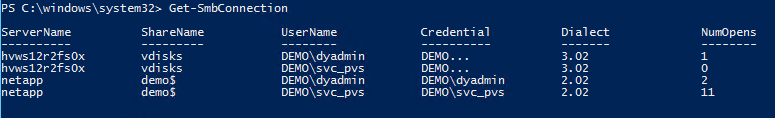

Taking the test a little further, I decided to see whether this was an issue with Scale-Out File Servers, SMB3, or something else. So, I took the files for one of my vDisks awaiting a merge and copied them to a NetApp Filer running SMB 2.0. I confirmed the connection to the Filer was using SMB 2.0 by running “Get-SMBConnection” from an elevated PowerShell prompt:

The NetApp aggregate is backed by fewer SATA disks; performance-wise, I only ever get about 60MB/s on copy operations. All data points led me to believe that merging would be slower there, since the SMB3 SOFS performs so much better. However, as Brian saw in his environment, the NetApp with SMB 2 is not adversely affected: merge operations averaged about 10-20MB/s, tremendously better than the SOFS. Checking Process Monitor, the NetApp transfers were also 512B in size, so perhaps the issue isn’t the transfer size at all, but rather something in SMB 3.02? Puzzled, I continued to hunt for answers.
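To put those rates in perspective, here is a back-of-the-envelope estimate of how long a merge takes if it has to rewrite an entire vDisk at a sustained rate. The 50 GiB size is an assumption, roughly the size of the image Brian mentions in the comments. A real merge may touch less than the full disk, so read these numbers as rough orders of magnitude.

```python
def merge_hours(vdisk_gib, rate_mib_per_s):
    """Hours to rewrite a fully allocated vDisk at a sustained merge rate."""
    return vdisk_gib * 1024 / rate_mib_per_s / 3600


VDISK_GIB = 50  # assumed vDisk size
for label, rate in [("SOFS, ~512KB/s", 0.5),
                    ("SMB 2 NetApp, 10MB/s", 10),
                    ("SMB 2 NetApp, 20MB/s", 20)]:
    print(f"{label}: {merge_hours(VDISK_GIB, rate):.1f} h")
# SOFS, ~512KB/s: 28.4 h
# SMB 2 NetApp, 10MB/s: 1.4 h
# SMB 2 NetApp, 20MB/s: 0.7 h
```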

Additional troubleshooting steps included shutting down one of the Scale-Out File Servers so all connections were forced through a single file server; disabling Receive Side Scaling (enabled by default); disabling SMB 3 Multichannel (enabled by default); disabling Large Send Offload and Task Offload; and disabling Continuous Availability on the share. Grasping at straws, I decided to ditch the Scale-Out File Server model and create a traditional Active/Passive File Server role. Lo and behold, this worked like a charm and delivers 10-20MB/s merge speeds. In the screenshot below, FS01 is my original Scale-Out File Server role and FS02 is the new Active/Passive role:

While I haven’t been able to find any documentation on Scale-Out File Server and Provisioning Services interoperability, I would imagine that, if pressed, Citrix would say it’s not supported at this time. Hopefully there is an easy solution that I’m simply overlooking. I even wondered whether there’s a way to disable SMB Scale-Out on the SMB client side, so that round-robin would still work but the client would stay pinned to a specific file server once the SMB session was established.

Moral of the story: while I was originally led to believe this was a TCP/IP problem caused by millions of tiny 512B requests, it turned out to be a Scale-Out File Server incompatibility (or partial compatibility) with Provisioning Services. Therefore, until this issue is resolved and PVS becomes SOFS-aware, I am not recommending Scale-Out File Servers for PVS vDisk stores. If you do use SOFS, be sure to use a Maintenance Store as described above. Otherwise, you can certainly still use Windows Server 2012 R2 file servers, but they should be configured traditionally, in an Active/Passive fashion.

If you have any thoughts on how to make the Scale-Out File Server role more reliable and better performing for PVS vDisk stores, please leave a comment below!

As always, if you have any questions, comments, or just want to leave feedback, please do so below. Thanks for reading!

Have you also looked at the following post?

http://blogs.technet.com/b/josebda/archive/2013/10/30/automatic-smb-scale-out-rebalancing-in-windows-server-2012-r2.aspx

Also worth checking out:

Get/Set-SmbClientConfiguration


You can also disable multichannel using those commands.

Are you also disabling SMB 1.0 via PowerShell? Making sure file share tuning is in place? What is your network setup, 1Gb or 10Gb?

Following the logic of this article, I should be seeing similar results but I don’t. Today, I’m looking at a 45 – 60 minute merge on a XenApp 6.5 ~50 GB image. The CIFS store is a NetApp filer with PVS 6.1–everything is 2008 R2. Granted, this setup is a bit different but shouldn’t I also be seeing 24 hour merges?

Brian,

I’ve appended the blog post with some recent findings based on your feedback.

Let’s continue the dialog, I’m curious if there’s anyone else out there experiencing similar behaviors.

–youngtech

Really great writeup – I’ve been thinking about moving over to SMB3-based PVS stores as part of my next architecture upgrade, so this couldn’t be more timely!

Awesome. Glad to help! Best of luck.

–youngtech